I have come across a few good examples of the Producer-Consumer pattern; however, they tend to be contrived and don't correspond well to real-world usage. I realise that examples are generally contrived for the sake of brevity, to keep them clear and concise. I admit that the example code I have produced for this blog post is also contrived; nonetheless, I have tried to make it resemble a real-world implementation of the pattern. To achieve this, I have ensured that the concurrent elements of the Producer and Consumer aren't completely self-contained (i.e. they are not simply a loop producing a fixed number of elements to be consumed).

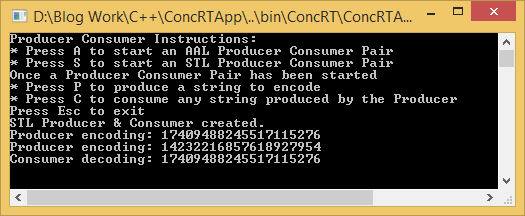

In this blog post I will compare two implementations of the Producer-Consumer pattern in C++: one using the Standard Template Library (STL) and the other using the Asynchronous Agents Library (AAL) from the Concurrency Runtime. The processes compress and decompress strings, using a run-length encoding implementation from another project: the Producer compresses the strings and the Consumer decompresses them. The reader will need a solid understanding of both C++ and concurrency/multithreading.
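The RLE implementation used in the post comes from another project and isn't shown here. For readers unfamiliar with run-length encoding, here is a minimal sketch of the idea. It is hypothetical: the names rleEncode and rleDecode and the "count then character" format are assumptions, not the format used by the post's RLE::decode<string>.

```cpp
#include <cctype>
#include <cstddef>
#include <string>

// Hypothetical run-length encoding: each run is written as its length
// followed by the repeated character, e.g. "aaab" -> "3a1b".
// Assumes the input contains no digit characters, because digits are
// reserved for the run lengths in this format.
std::string rleEncode(const std::string& input)
{
    std::string encoded;
    std::size_t i = 0;
    while (i < input.size())
    {
        const char current = input[i];
        std::size_t runLength = 1;
        while (i + runLength < input.size() && input[i + runLength] == current)
        {
            ++runLength;
        }
        encoded += std::to_string(runLength);
        encoded += current;
        i += runLength;
    }
    return encoded;
}

// Reverses rleEncode: accumulates a decimal run length, then repeats the
// next non-digit character that many times.
std::string rleDecode(const std::string& encoded)
{
    std::string decoded;
    std::size_t runLength = 0;
    for (const char c : encoded)
    {
        if (std::isdigit(static_cast<unsigned char>(c)))
        {
            runLength = runLength * 10 + static_cast<std::size_t>(c - '0');
        }
        else
        {
            decoded.append(runLength, c);
            runLength = 0;
        }
    }
    return decoded;
}
```

With this format, rleEncode("aaabccdddd") yields "3a1b2c4d", and decoding that string restores the original. In the post's design, the Producer performs the encode step and the Consumer performs the decode step.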

Producer-Consumer Pattern

Wikipedia has the best description of the Producer-Consumer pattern that I can find. Essentially, the pattern addresses the need to synchronise two processes, one of which produces items for the other to consume. The processes communicate through a shared buffer: the producer adds items to the buffer and the consumer removes them. Access to the buffer must be controlled so that only one process modifies it at a time, which preserves its integrity. The consumer must also wait, rather than read, while the buffer is empty. In the example code, the IProducer and IConsumer interfaces define the contract that both types of Producer and Consumer must fulfil.
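To make the shared-buffer idea concrete before looking at either implementation, here is a minimal, self-contained sketch that uses only the standard library. The function name runProducerConsumer and the finished flag are illustrative assumptions; this is not code from the post's projects.

```cpp
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

// Minimal sketch of the pattern: one producer thread and one consumer thread
// share a std::queue. The mutex ensures only one thread modifies the buffer at
// a time. The condition variable lets the consumer sleep while the buffer is
// empty. The "finished" flag is an assumed end-of-production signal.
std::vector<int> runProducerConsumer(int itemCount)
{
    std::queue<int> buffer;
    std::mutex mutex;
    std::condition_variable itemAvailable;
    bool finished = false;
    std::vector<int> consumed;

    std::thread producer([&] {
        for (int i = 0; i < itemCount; ++i)
        {
            {
                std::lock_guard<std::mutex> lock(mutex);
                buffer.push(i);
            }
            itemAvailable.notify_one();
        }
        {
            std::lock_guard<std::mutex> lock(mutex);
            finished = true;
        }
        itemAvailable.notify_one();
    });

    std::thread consumer([&] {
        std::unique_lock<std::mutex> lock(mutex);
        while (true)
        {
            // Sleep until there is an item or production has finished.
            itemAvailable.wait(lock, [&] { return !buffer.empty() || finished; });
            if (buffer.empty())
            {
                break; // production finished and the buffer is drained
            }
            consumed.push_back(buffer.front());
            buffer.pop();
        }
    });

    producer.join();
    consumer.join();
    return consumed; // only read after both threads have been joined
}
```

Calling runProducerConsumer(100) returns the items 0 to 99 in the order they were produced. Both implementations in this post are more elaborate versions of this exchange.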

AAL

The Asynchronous Agents Library is an actor-based programming model that uses message passing to communicate between actors (agents). It relies on the scheduling and resource management of the Concurrency Runtime (ConcRT) and provides coarse-grained control over an application's concurrency. In other words, you control the requests to produce and consume data, and the ConcRT schedules these tasks for you. Message passing is an alternative to synchronisation primitives such as a mutex or critical section. The main selling point of the ConcRT is that it provides the concurrency infrastructure for your application by automatically distributing the workload across the available CPUs. This frees developers to concentrate on the application itself without having to worry too much about concurrency. Using the AAL requires two decisions: which type of message block to use as the buffer, and which message-passing functions to use.

Asynchronous Message Blocks

Thread-safe message passing is provided by message blocks. Message blocks implement the Concurrency::ISource and/or Concurrency::ITarget interfaces and act as the endpoints of the messaging network. A source block propagates messages to its targets, and a target block receives the messages offered to it by sources. There are nine message blocks in total, but I only used two. I used an unbounded_buffer to communicate between the Producer and Consumer. This type of buffer supports an unlimited number of sources and targets, and it preserves the order of messages between sending and receiving. I also used a single_assignment message block in the Producer to signal the agent to finish production. Finally, I used Concurrency::concurrent_queue, a thread-safe queue from the Parallel Patterns Library's concurrent containers. It stores the data waiting to be sent by the Producer and the data received by the Consumer. It behaves much like a std::queue, but is safe to use from multiple threads. These pieces come together in the Producer agent's declaration:

```cpp
#include <agents.h>            // Concurrency::agent, ITarget, single_assignment
#include <concurrent_queue.h>  // Concurrency::concurrent_queue
#include <string>

// IProducer is declared elsewhere in the project
class AALProducer final : public Concurrency::agent, virtual public IProducer
{
public:
    explicit AALProducer(Concurrency::ITarget<std::string>& targetBuffer);

    void produce(const std::string& stringToEncode);
    void stop();

protected:
    void run() override;

private:
    Concurrency::ITarget<std::string>& m_targetBuffer;
    Concurrency::single_assignment<std::string> m_stopBuffer;
    Concurrency::concurrent_queue<std::string> m_stringsToEncode;
};
```

Message Passing Functions

Messages are passed using three functions. The Producer uses Concurrency::asend(…) to send messages asynchronously. The executing Producer agent uses the non-blocking Concurrency::try_receive(…) to check whether it should stop. Finally, the executing Consumer uses the blocking Concurrency::receive(…) to receive the messages sent by the Producer.
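The AAL is specific to Microsoft's Visual C++ runtime. As a portable illustration of the stop-signal idea, the sketch below swaps in std::promise and std::shared_future in place of single_assignment. It is not the AAL API. The promise can be fulfilled only once, and a zero-length wait_for plays the role of the non-blocking try_receive(…) check. The StopSignal class and produceUntilStopped function are hypothetical.

```cpp
#include <atomic>
#include <chrono>
#include <future>
#include <thread>

// Hypothetical portable stand-in for the AAL stop signal: a write-once value
// (std::promise) that workers can poll without blocking.
class StopSignal
{
public:
    StopSignal() : m_future(m_promise.get_future().share()) {}

    // Sets the signal. Call this only once: a second set_value() on the same
    // promise throws std::future_error.
    void requestStop() { m_promise.set_value(); }

    // Non-blocking check, similar in spirit to try_receive(...).
    bool stopRequested() const
    {
        return m_future.wait_for(std::chrono::seconds(0)) == std::future_status::ready;
    }

private:
    std::promise<void> m_promise;      // declared first, so it is constructed first
    std::shared_future<void> m_future;
};

// Example worker: keeps "producing" until it sees the stop signal.
void produceUntilStopped(const StopSignal& stop, std::atomic<long>& itemsProduced)
{
    while (!stop.stopRequested())
    {
        ++itemsProduced; // placeholder for real work, e.g. encoding a string
        std::this_thread::yield();
    }
}
```

Once requestStop() is called, the worker's next poll sees a ready future and the loop exits. In C++20, std::jthread with std::stop_token offers a standard alternative for this kind of cooperative cancellation.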

The Consumer agent blocks once started, waiting to receive data. The blocking receive(…) function accepts a timeout parameter, which can be set through the overloaded consume method and defaults to infinite. If no message arrives before the timeout expires, receive(…) throws an operation_timed_out exception. The Consumer agent also stops executing once it receives a designated end message (EndMessage) from the Producer:

```cpp
void AALConsumer::run()
{
    string stringToDecode;
    string decodedString;

    try
    {
        // Blocking call - producer needs to populate the buffer before the timeout expires
        while ((stringToDecode = receive(m_sourceBuffer, m_timeout)) != EndMessage)
        {
            decodedString = RLE::decode<string>(stringToDecode);
            m_decodedStrings.push(decodedString);
        }
    }
    catch (const operation_timed_out&)
    {
        m_decodedStrings.push("");
    }

    done();
}
```

STL

To implement the pattern using the STL, I had to manage the threads for the Producer and Consumer manually. Running them on separate threads allows them to run concurrently and independently of each other. Because the application is multithreaded, I also needed a thread-safe container.

Concurrent Queue

I achieved this by wrapping the std::queue container adaptor in a class that used a std::mutex for synchronising access to the container:

```cpp
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <queue>

template <typename T, typename ContainerClass = std::deque<T>>
class ConcurrentQueue final
{
public:
    ConcurrentQueue();
    explicit ConcurrentQueue(const std::queue<T, ContainerClass>& items);

    void push(const T& item);

    // Returns a flag specifying success or failure (empty queue) as well as assigning to the reference
    bool try_pop(T& item);

    // Same as above but polls the queue until the timeout expires
    bool try_pop(T& item, std::size_t timeout);

    // Blocks until the queue is populated through the use of a condition variable
    T pop();

    bool empty();
    std::size_t size();

private:
    std::mutex m_mutex;
    std::queue<T, ContainerClass> m_items;
    std::condition_variable m_cv;
};
```

My implementation ended up similar to those by Just Software Solutions and Juan Chopanza, apart from the std::condition_variable. Originally, my pop() method continually polled the internal queue, locking the mutex on every check and so increasing contention on it. Following their recommendation, I added a std::condition_variable so that pop() can wait to be notified when the queue is populated:

```cpp
template <class T, class ContainerClass>
T ConcurrentQueue<T, ContainerClass>::pop()
{
    std::unique_lock<std::mutex> scopedLock(m_mutex);
    while (m_items.empty())
    {
        m_cv.wait(scopedLock);
    }
    auto item = m_items.front();
    m_items.pop();
    return item;
}
```
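The post doesn't show push() or the try_pop() overloads. The sketch below shows one way they might look with the condition variable in place. It is a cut-down, standalone version of the class, not the author's code. It assumes the timeout is in milliseconds. It also replaces the polling described for try_pop(item, timeout) with std::condition_variable::wait_for, whose predicate overload handles spurious wake-ups.

```cpp
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <queue>

// Cut-down sketch of ConcurrentQueue showing push() and both try_pop()
// overloads. Assumption: the timeout is expressed in milliseconds.
template <typename T, typename ContainerClass = std::deque<T>>
class ConcurrentQueue final
{
public:
    void push(const T& item)
    {
        {
            std::lock_guard<std::mutex> scopedLock(m_mutex);
            m_items.push(item);
        }
        m_cv.notify_one(); // wake one thread waiting in pop() or try_pop(item, timeout)
    }

    // Non-blocking: returns false immediately if the queue is empty.
    bool try_pop(T& item)
    {
        std::lock_guard<std::mutex> scopedLock(m_mutex);
        if (m_items.empty())
        {
            return false;
        }
        item = m_items.front();
        m_items.pop();
        return true;
    }

    // Waits on the condition variable, rather than polling, for up to
    // `timeout` milliseconds. Returns false if the queue is still empty.
    bool try_pop(T& item, std::size_t timeout)
    {
        std::unique_lock<std::mutex> scopedLock(m_mutex);
        if (!m_cv.wait_for(scopedLock, std::chrono::milliseconds(timeout),
                           [this] { return !m_items.empty(); }))
        {
            return false;
        }
        item = m_items.front();
        m_items.pop();
        return true;
    }

private:
    std::mutex m_mutex;
    std::queue<T, ContainerClass> m_items;
    std::condition_variable m_cv;
};
```

Notifying after releasing the lock in push() means the woken thread does not immediately block on a mutex that is still held.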

Multithreading the Producer & Consumer

To manage the threading infrastructure for the Producer and Consumer, I followed Mario Konrad's PThread tutorial, using std::thread to create the individual threads. The Thread class is the base class for both the Producer and Consumer, each of which provides its own implementation of the pure virtual run() method:

```cpp
#include <memory>
#include <thread>

class Thread
{
public:
    Thread();
    ~Thread();

    void start();
    void join();

protected:
    virtual void run() = 0;

private:
    std::unique_ptr<std::thread> m_thread;
    bool m_canStart;
};
```
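The post only shows the declaration of Thread. The following is a hypothetical sketch of how start() and join() might be implemented around std::thread. Two things are assumptions: that m_canStart prevents a second start, and the CountingThread example class. The sketch also makes the destructor virtual, which is the usual choice for a polymorphic base class.

```cpp
#include <memory>
#include <thread>

// Hypothetical implementation of the Thread base class.
// Assumption: m_canStart guards against start() being called twice.
class Thread
{
public:
    Thread() : m_canStart(true) {}

    // Joins as a last resort only; derived classes should join first (see below).
    virtual ~Thread() { join(); }

    void start()
    {
        if (m_canStart)
        {
            m_canStart = false;
            // Calling through the member pointer dispatches to the derived run().
            m_thread = std::make_unique<std::thread>(&Thread::run, this);
        }
    }

    void join()
    {
        if (m_thread && m_thread->joinable())
        {
            m_thread->join();
        }
    }

protected:
    virtual void run() = 0;

private:
    std::unique_ptr<std::thread> m_thread;
    bool m_canStart;
};

// Example derived class: run() executes on the new thread.
class CountingThread final : public Thread
{
public:
    // Join before the derived part is destroyed, so run() never outlives it.
    ~CountingThread() override { join(); }

    int result() const { return m_result; }

protected:
    void run() override
    {
        for (int i = 1; i <= 10; ++i)
        {
            m_result += i;
        }
    }

private:
    int m_result = 0;
};
```

Joining in the derived destructor matters. If the base destructor were the first to join, the derived object would already be destroyed while run() might still be executing on the other thread.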

Windows Performance Toolkit

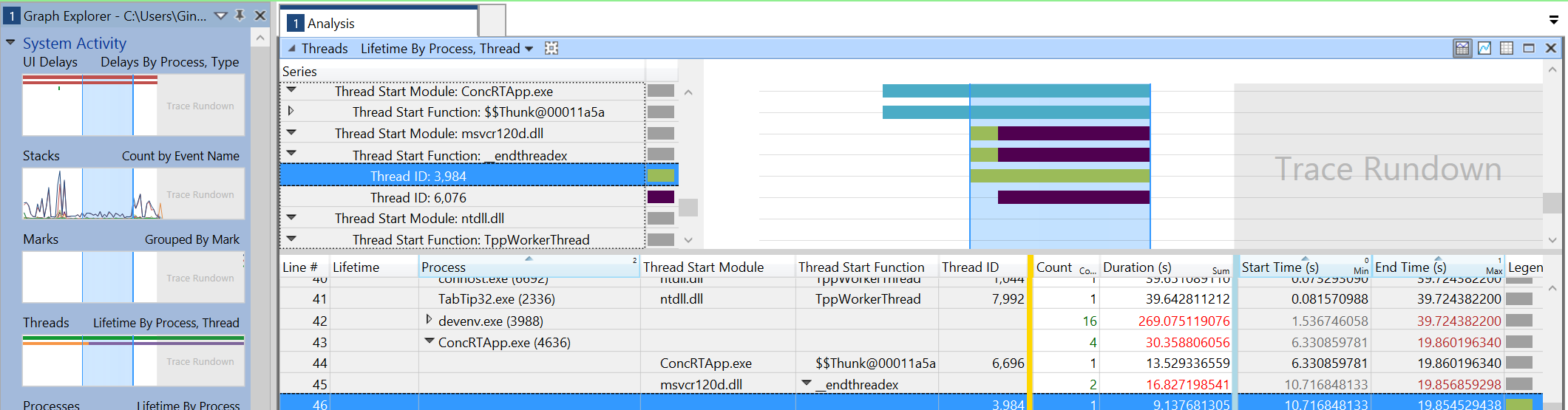

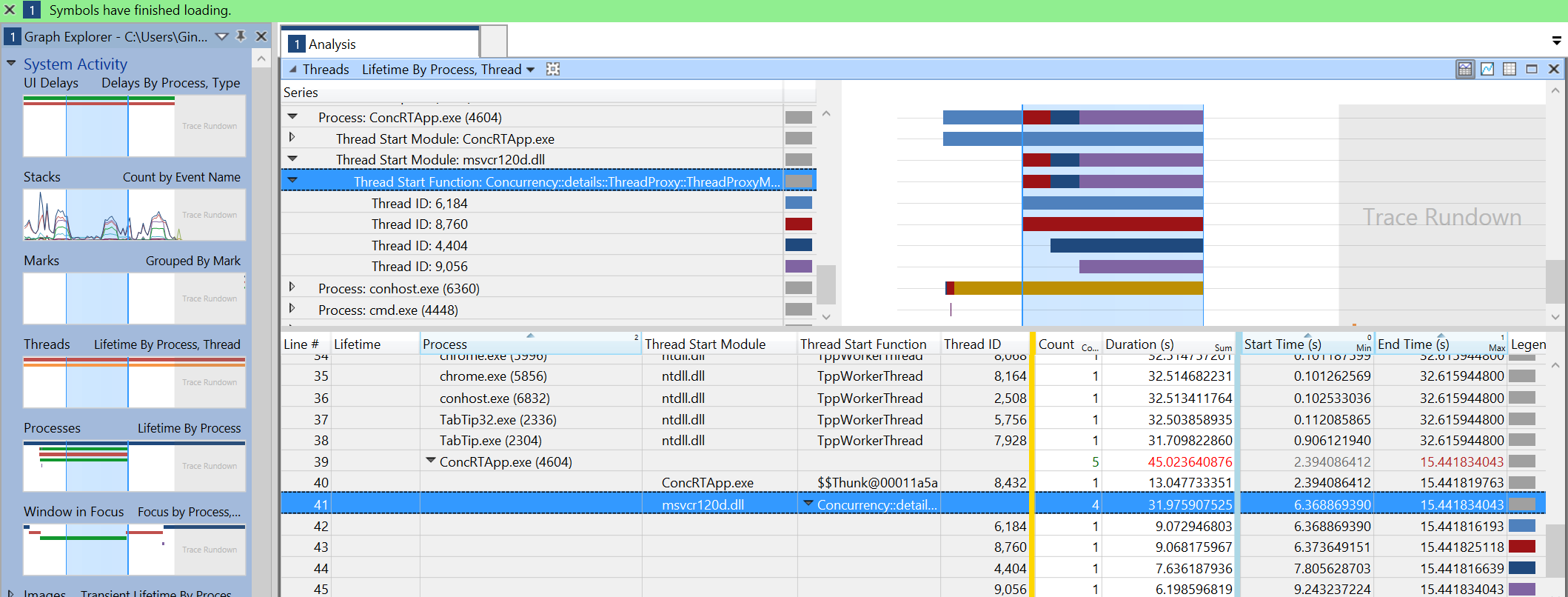

In order to get a visual representation of what the executing code was doing I decided to carry out a performance capture using the Windows Performance Recorder (WPR) from the Windows Performance Toolkit. I was then able to analyse the captured trace log in the Windows Performance Analyser (WPA). Looking at the capture involving the STL Producer and Consumer we can see the two threads that we create in STL for the Producer and Consumer to run on, by viewing the Threads graph:

However if we analyse the capture involving the AAL Producer and Consumer, we can see that there are four threads that were executing during the trace. This captures were taken on my Surface Pro 3 that has two cores which can service two threads each. This is the ‘proof in the pudding’ for the AAL. The ConcRT automatically distributed the tasks across the four available threads:
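For comparison, with the STL you would have to size and distribute the work yourself. The sketch below shows a manual equivalent using std::thread::hardware_concurrency(). On the dual-core, four-thread machine described above, that call would typically report 4. The helpers workerCount and distributeWork and the round-robin split are hypothetical, and this is not how ConcRT schedules work internally.

```cpp
#include <algorithm>
#include <cstddef>
#include <thread>
#include <vector>

// Manual version of what ConcRT does automatically: size a set of workers
// to the number of hardware threads. hardware_concurrency() may return 0
// when the value is unknown, so fall back to a single worker.
unsigned workerCount()
{
    return std::max(1u, std::thread::hardware_concurrency());
}

// Hypothetical helper: splits items [0, itemCount) round-robin across the
// workers and returns how many items each worker processed.
std::vector<std::size_t> distributeWork(std::size_t itemCount)
{
    const unsigned workers = workerCount();
    std::vector<std::size_t> processed(workers, 0);
    std::vector<std::thread> threads;

    for (unsigned w = 0; w < workers; ++w)
    {
        threads.emplace_back([&processed, w, workers, itemCount] {
            // Worker w takes items w, w + workers, w + 2 * workers, ...
            for (std::size_t i = w; i < itemCount; i += workers)
            {
                ++processed[w]; // each worker writes only its own slot
            }
        });
    }

    for (auto& t : threads)
    {
        t.join();
    }
    return processed;
}
```

Everything ConcRT handles for you, such as choosing the worker count, partitioning the work and joining the threads, has to be written and kept correct by hand here.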

Summary

Hopefully you will find that this example implementation of the Producer-Consumer pattern simulates real-world usage; at the very least, it should serve as another contrived example of the pattern. My primary takeaway from writing this blog post and the associated code is that the ConcRT is very useful. Because it automatically apportions work to the available CPUs, you can write scalable code very easily. Also, because we as developers don't have to manage the concurrency infrastructure ourselves, there are fewer things for us to get wrong, so our code should be more reliable.

Source Code

The source code associated with this blog post is split into three projects. The ConcRT project produces a DLL containing the logic, the ConcRTTest project contains all of the unit tests, and the ConcRTApp project produces a console application that executes the code. They can all be found on Bitbucket.